NO FUN STUDIO X LA CASA ARTHOUSE X BOBBLEHAUS

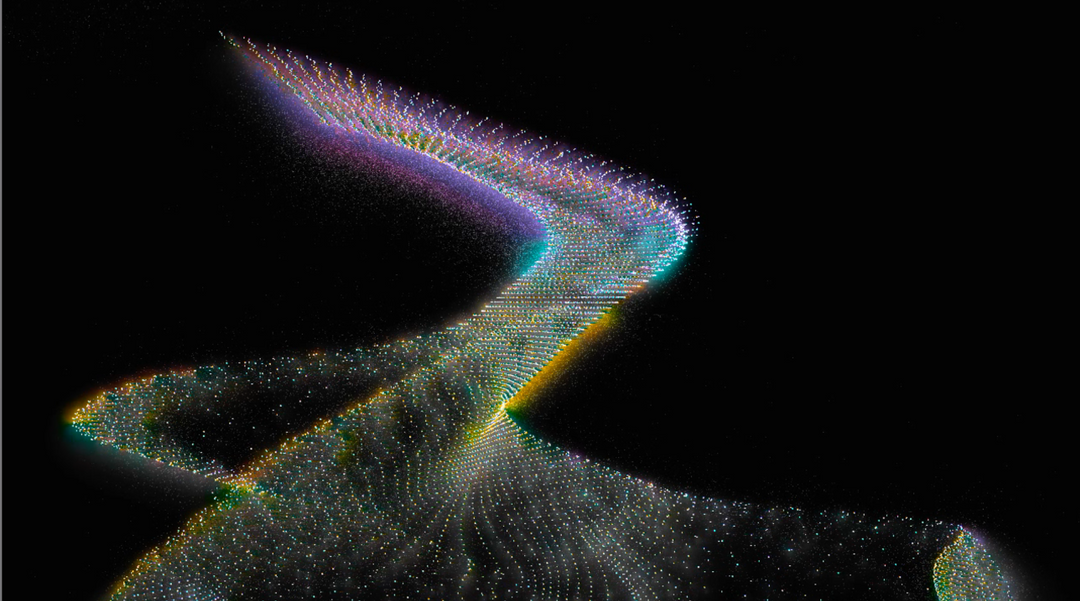

The Secret Garden is the first project publicly displayed under my company, No Fun Studio. It uses dual projection mapping and body-tracking depth sensors to bring an immersive space to life in the heart of Williamsburg, Brooklyn.

La Casa Arthouse

Bobblehaus

The Secret Garden

DEC. 2020 — JAN. 2020

The Secret Garden explores a form of augmented reality in which visitors interact with their reflection in a flowing particle simulation, building Delaunay triangulations from the positions of their hands that resemble the structure of plants. The floor projection handles interaction through aerial blob tracking and a physics engine that displaces particles based on momentum and velocity, dispersing the interactive meadow at visitors' feet. I developed this real-time project in TouchDesigner, with art direction by Gonzo Gelso.
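For readers curious about the floor interaction, here is a minimal Python sketch of the general idea: a tracked blob pushes nearby particles in the direction it is moving. This is not the TouchDesigner network used in the installation. The names `Particle`, `apply_blob_impulse`, and `step`, as well as the linear falloff, the radius, and the damping value, are all assumptions chosen for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class Particle:
    x: float
    y: float
    vx: float = 0.0
    vy: float = 0.0


def apply_blob_impulse(particles, blob_pos, blob_vel, radius=1.0, strength=1.0):
    """Add part of the blob's velocity to every particle inside `radius`.

    The push fades linearly from full strength at the blob centre to zero
    at the edge of the radius (an assumed falloff, picked for simplicity).
    """
    bx, by = blob_pos
    bvx, bvy = blob_vel
    for p in particles:
        dist = math.hypot(p.x - bx, p.y - by)
        if dist < radius:
            falloff = 1.0 - dist / radius
            p.vx += strength * falloff * bvx
            p.vy += strength * falloff * bvy


def step(particles, dt=1.0 / 60.0, damping=0.9):
    """Move particles by their velocity, then damp the velocity so they settle."""
    for p in particles:
        p.x += p.vx * dt
        p.y += p.vy * dt
        p.vx *= damping
        p.vy *= damping


if __name__ == "__main__":
    meadow = [Particle(0.0, 0.0), Particle(5.0, 5.0)]
    # A blob at the origin moving to the right only disturbs the nearby particle.
    apply_blob_impulse(meadow, blob_pos=(0.0, 0.0), blob_vel=(2.0, 0.0))
    step(meadow, dt=0.5)
    print(meadow)
```

Calling `apply_blob_impulse` once per tracked blob per frame, followed by a single `step`, gives the scattering effect in its simplest form. A faster-moving blob transfers a larger push, which is the "momentum and velocity" behaviour described above.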